Bots called to action to prop up crumbling narrative


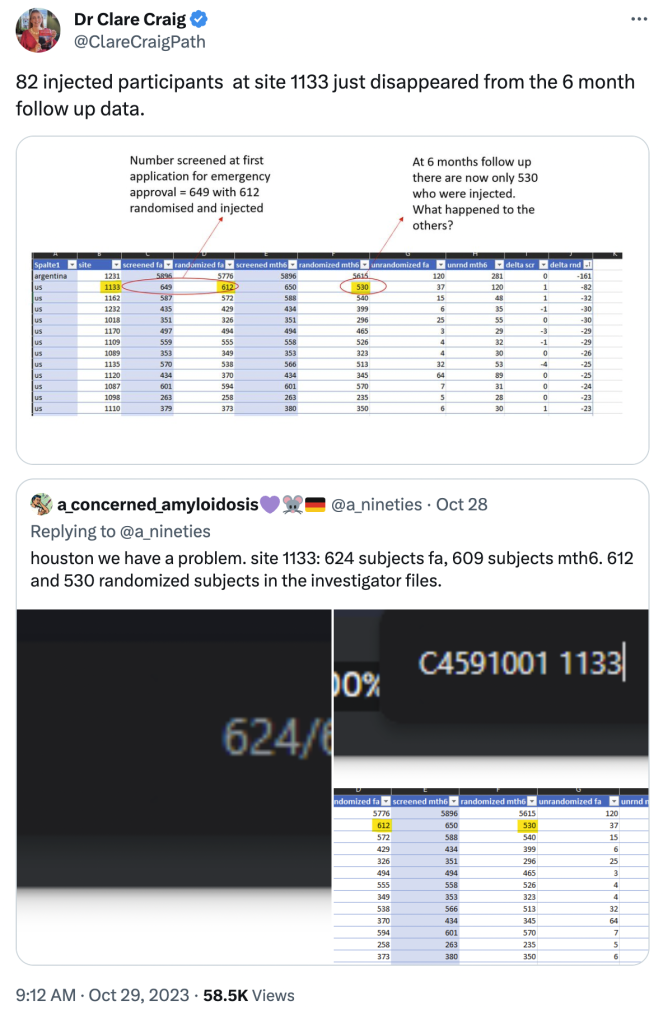

On 29 October 2023 at 09:12, one of HART’s co-chairs, Dr Clare Craig, tweeted the following:

The content of her tweet (interesting though it is) is not relevant for present purposes.

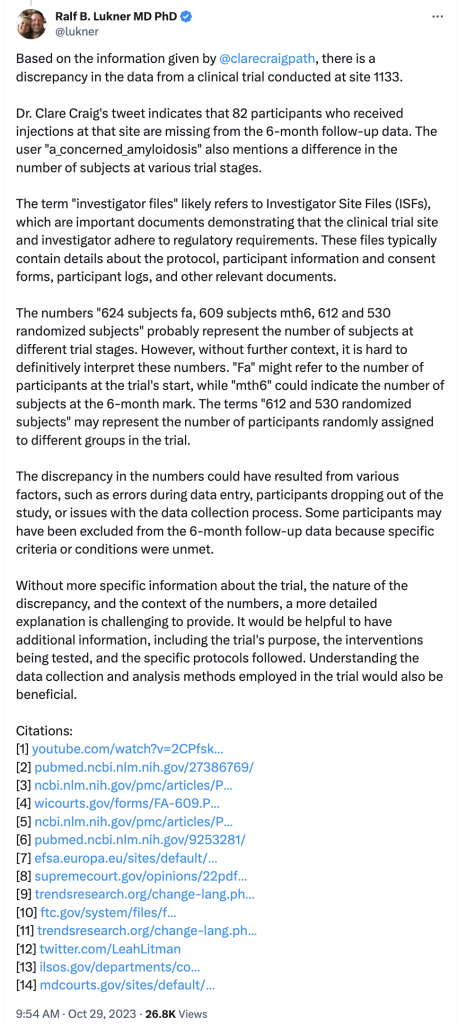

Forty-two minutes later, an account in the name of Ralf Lukner MD PhD responded as follows.

At first glance, a well-structured and certainly well-cited response. The mark of someone truly on top of his game, to be able to respond in such a considered and fully evidenced manner.

But read the tweet and it actually makes very little sense. It’s in English, but somehow never gets to the point. The sentences don’t really relate to each other, and there’s no overarching message.

In fact, the style of this resembles a conversation with ChatGPT.

Delving into this a little further, the stigmata of AI are there for all to see.

Firstly, the account owner describes himself as an internist in Texas. Aside from the fact that it’s unlikely in the extreme that such a “jobbing” doctor would have the breadth of knowledge to produce a cogent response (not that it is one), it should also be pointed out that it took “him” only 42 minutes to do so, at what was (for him) 3 AM.

Moreover, clicking on the actual “citations” shows that none of them is relevant to the subject matter of Clare’s tweet. They are not just irrelevant to the very specific point of the tweet; apart from the first one, they are totally irrelevant to covid.

Clicking on each of the references brings up the following:

- A video of Clare talking about the response to covid in general terms.

- A 2016 paper about Cardiac Amyloidosis

- A 2017 trial about treatments for Macular Edema Due to Central Retinal Vein Occlusion

- A pro forma court document from Wisconsin

- A 2010 Cochrane report “Lay health workers in primary and community health care for maternal and child health and the management of infectious diseases”

- A 1997 BMJ article about informed consent in clinical trials

- A 2006 European Food Safety Authority report into tolerable limits for vitamins and minerals

- An October 2022 US Supreme Court decision in a case involving Twitter and material related to terrorism

- A non-existent link which generates a security warning

- Another 2022 judgment in a case involving Twitter, this time brought by the Federal Trade Commission in a Californian District Court

- A link to another site generating a security warning

- A link to the Twitter profile of a law professor called Leah Litman

- A link that returns “Access denied”

- An opinion of the Appeal court of Maryland in a defamation case

Clearly, these tactics are utterly unconvincing scientifically and their appearance must surely be good news. Why? Because the people behind these accounts must know they are generating nonsensical responses. They must also know that these are vastly inferior to human-generated material – however misguided such material might be.

The most likely reason for the use of such automated systems is that those behind the accounts have insufficient resources to respond individually to the volume of material being posted in opposition to their favoured narrative. As well as reflecting a growing number of people becoming aware of the truth about the covid “vaccines”, this could also reflect a shrinking number of people who wish to perjure themselves for hours a day, now that it has become obvious that all they are doing is lining the pockets of greedy corporations who seem not to care about the wellbeing of any of the users of their products.

In other words, it reeks of desperation.